There has been a lot of confusion about image resolution, its different approaches and use cases, and the problem gets pretty messy as you go down the rabbit hole.

I have been asked which resolution is the right one: should one render CG in 4K or in HD? Should one use a 4K or an 8K camera? The question is simple, and one could think that the answer is also simple. But it isn’t at all. To explain this, I will have to compare computer-generated images with those shot with a real physical camera (analog or digital). For the computer-generated side, I will use my own Deckard Render for Unity, a renderer made for simulating real, physical camera and film behavior while using ultra-fast 3D acceleration based on the Unity engine.

To understand this question better, let’s start by defining what resolution is. Resolution is a count of pixels, but this doesn’t relate much to the real world without knowing what our support (the final screen or print medium) is. Mostly, we would say that resolution defines the sharpness of an image? The impossibility of seeing pixels in a digital reproduction? Yes. But by just counting pixels, we don’t actually have any means of understanding the actual resolution. From this standpoint, let’s presume that the term RESOLUTION means: the capacity to resolve fine details in an image. This should be pretty straightforward, no?

When we look it up in a dictionary, however, resolution means something else:

/rɛzəˈluːʃ(ə)n/ RESOLUTION – A firm decision to do or not to do something.

I will come back to this definition later on. For now, let’s stick with the phrase “resolving fine details in an image”.

We have to understand that an image is resolved in several stages (here I will use the analogy of a real digital camera vs. a CG-generated image from Deckard/Unity):

- Optical stage (in a real camera, this happens in the lens; in Deckard Render, it happens in its own physical simulation of a lens)

- Sampling stage (in a real camera, this happens on the sensor; in Deckard Render, it happens internally in the software)

- Capturing stage (writing to a video file)

- Reproduction stage (reading a file and showing it on a monitor or a theatrical screen)

Final image resolution (the ability to resolve fine details) depends on all of those stages, and it’s enough for one stage to be imperfect to get a drop in image resolution. In physical photography (or a still image print), resolution is “mostly” a simple thing: you know your final support size, and you just print at the requested resolution with respect to DPI. In motion pictures, this gets pretty complex really fast.
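To make the “one weak stage is enough” point concrete, here is a minimal Python sketch. It assumes every stage behaves like a simple Gaussian blur (a simplification: real lenses, sensors, codecs and screens are not Gaussian) and uses the standard rule that cascaded linear stages multiply their modulation transfer functions (MTF, i.e. how much contrast survives at a given level of detail). The per-stage blur values are made up for illustration.

```python
import math

def gaussian_mtf(sigma_px: float, freq: float) -> float:
    """Contrast retained by a Gaussian blur with std dev `sigma_px` (pixels)
    at spatial frequency `freq` (cycles per pixel)."""
    return math.exp(-2.0 * (math.pi * sigma_px * freq) ** 2)

def system_mtf(stage_sigmas: dict, freq: float) -> float:
    """Cascaded stages multiply their MTFs, so each stage can only lose detail."""
    total = 1.0
    for sigma in stage_sigmas.values():
        total *= gaussian_mtf(sigma, freq)
    return total

# Hypothetical blur per stage, in pixels (illustrative numbers, not measurements).
good_chain = {"optical": 0.5, "sampling": 0.4, "capturing": 0.3, "reproduction": 0.3}
# Same chain, but with one soft stage (e.g. a blurry projection).
weak_link = dict(good_chain, reproduction=1.2)

if __name__ == "__main__":
    f = 0.25  # half of the Nyquist limit (0.5 cycles/pixel)
    print(f"good chain: {system_mtf(good_chain, f):.2f}")
    print(f"weak link:  {system_mtf(weak_link, f):.2f}")
```

However sharp the other three stages are, the single soft stage pulls the contrast of fine detail down for the whole chain.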

NOW, LET’S TRY TO COMPARE CG AND A REAL CAMERA AT THOSE STAGES

Optical stage in a camera: the capacity to resolve detail is based mostly on your lens and film/sensor format. There is no such thing as a perfect lens, and any lens will introduce some kind of physical blurring (due to imperfections of the lens surfaces and other, physically more complex characteristics), on top of the blur from depth of field and camera motion (motion blur). In Deckard, the camera has a lens that is capable of DOF, but it doesn’t have any lens imperfections or aberrations.

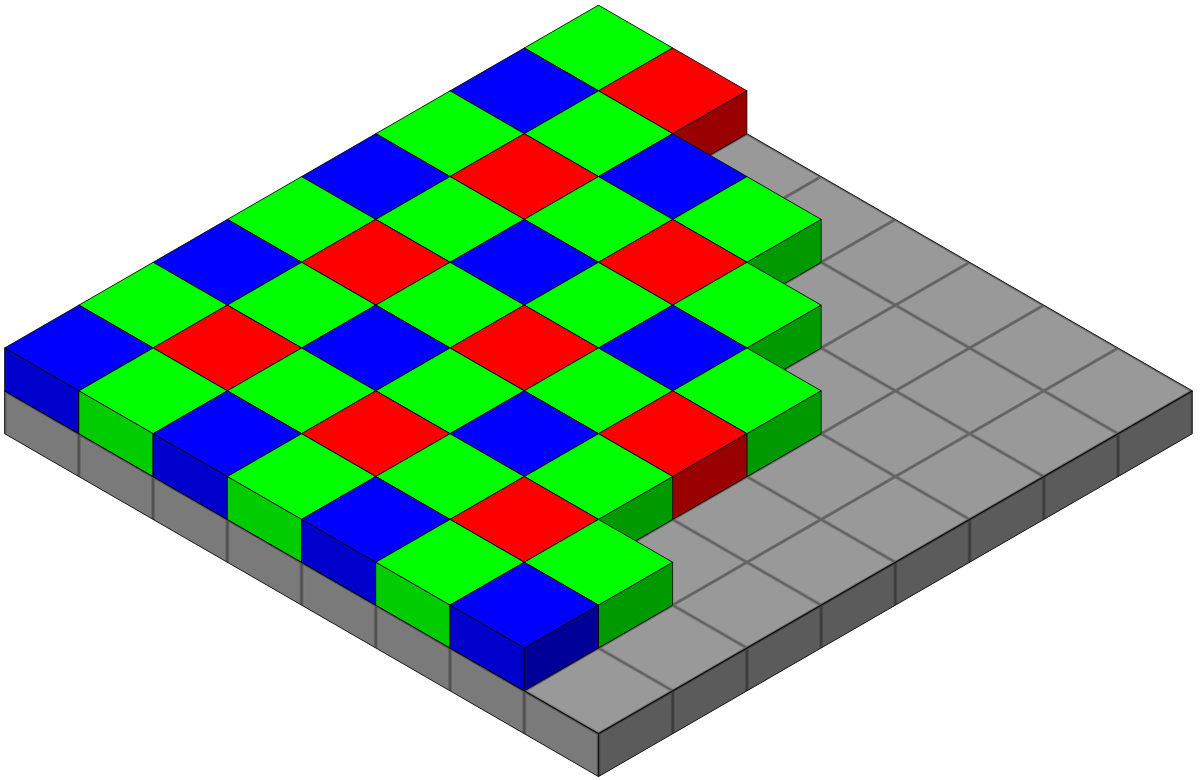
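Depth of field is one optical blur that is easy to put numbers on. The Python sketch below uses the standard thin-lens circle-of-confusion formula and converts the blur diameter into pixels for a given sensor width. The lens, distances and 36 mm sensor width are hypothetical example values, and this is not a description of how Deckard implements its lens.

```python
def coc_diameter_mm(focal_mm, f_number, focus_mm, subject_mm):
    """Thin-lens circle of confusion: blur disc diameter on the sensor (mm)
    for a point at `subject_mm` when the lens is focused at `focus_mm`."""
    aperture_mm = focal_mm / f_number
    return (aperture_mm * abs(subject_mm - focus_mm) / subject_mm
            * focal_mm / (focus_mm - focal_mm))

def coc_pixels(coc_mm, sensor_width_mm, horizontal_px):
    """Express the blur disc in pixels of the recorded/rendered frame."""
    return coc_mm / sensor_width_mm * horizontal_px

if __name__ == "__main__":
    # Hypothetical shot: 50 mm at f/1.4, focused at 2 m, background at 10 m,
    # on a 36 mm wide sensor.
    c = coc_diameter_mm(50, 1.4, 2000, 10000)
    for width in (1920, 3840):
        print(f"{width} px wide: background blur ~{coc_pixels(c, 36, width):.0f} px")
```

With these numbers the background blur is already tens of pixels wide in HD, so a higher resolution mostly spends its extra pixels describing the blur.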

Sampling stage in a digital camera: this is where things get tricky. Most CMOS cameras don’t actually have a fixed resolution (even if they state values like 4K or more), because a Bayer sensor almost always consists of ‘pixels’ that aren’t real pixels, but so-called photosites.

(Note: some cameras, such as JVC models, state “FullHD × 3”, meaning that they have three photosites for each color pixel; other cameras use 3CCD; and some use alternative photosite layouts, like the Blackmagic Ursa 12K, which even uses white photosites.)

Each photosite can sample only one color (R, G or B), so you actually need at least three photosites to sample one full color (a Bayer sensor uses a 2×2 block: one red, two green and one blue). This means that a sensor can sample at full resolution only when the real scene is made of blacks, grays and whites. It also means that any color outside the grayscale will have its own relative resolution, lower than 1:1. For example, a fully saturated red or blue will be sampled at 1/4 of the resolution, and a pure green at 1/2 of the resolution (guess why we use green screens?).
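A quick way to see where the 1/4 and 1/2 figures come from is to count the filters in a Bayer mosaic. This Python sketch assumes the common RGGB layout (other layouts, such as the RGBW one mentioned above, give different numbers) and ignores demosaicing, which interpolates the missing color values rather than sampling them.

```python
from collections import Counter

# Common RGGB Bayer tile: row 0 = R G, row 1 = G B.
RGGB = (("R", "G"), ("G", "B"))

def bayer_mosaic(width, height, tile=RGGB):
    """Color filter over each photosite of a width x height Bayer sensor."""
    return [[tile[y % 2][x % 2] for x in range(width)] for y in range(height)]

def channel_fractions(width, height):
    """Share of photosites that sample R, G and B respectively."""
    counts = Counter(c for row in bayer_mosaic(width, height) for c in row)
    total = width * height
    return {ch: counts[ch] / total for ch in "RGB"}

if __name__ == "__main__":
    print(channel_fractions(8, 8))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

Under pure red light, only the `R` entries of the mosaic receive signal, which is the quarter of the photosites discussed in the text.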

If you have a CMOS camera, try putting a red filter in front of the lens (or filming under red light), and you will instantly notice the drop in resolution, since only the red photosites, a quarter of the total, are now recording detail. On top of this, add the problem of noise: most cameras use some kind of denoiser, which lowers resolution even more. Some also feature sharpeners, but these mostly give only a perceived gain in crispness while actually downgrading resolution (I know this seems counterintuitive, but it’s a pretty complex thing to explain here).
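Here is a toy illustration of why noise reduction costs resolution. A 3-tap moving average stands in for the denoiser; real in-camera noise reduction is far more sophisticated, so treat this Python sketch as an assumption-laden simplification. Applied to the finest possible stripe pattern it removes two thirds of the contrast, while coarser 3-pixel stripes keep their full peak contrast.

```python
def box_blur_3(signal):
    """3-tap moving average: a crude stand-in for in-camera noise reduction.
    Only positions with both neighbours are kept ("valid" convolution)."""
    return [sum(signal[i - 1:i + 2]) / 3 for i in range(1, len(signal) - 1)]

def michelson_contrast(values):
    """(max - min) / (max + min): 1.0 means the pattern is fully resolved."""
    hi, lo = max(values), min(values)
    return (hi - lo) / (hi + lo)

fine = [0, 1] * 6                 # finest possible detail: 1-pixel stripes
coarse = [0, 0, 0, 1, 1, 1] * 2   # 3-pixel stripes

if __name__ == "__main__":
    for name, s in (("1 px stripes", fine), ("3 px stripes", coarse)):
        print(name, round(michelson_contrast(s), 2), "->",
              round(michelson_contrast(box_blur_3(s)), 2))
```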

- Sampling stage in Deckard: it is done without any of the problems related to image samplers (sensors) in a real camera. Actually, I had to add some artificial noise to make the image look and behave more organically. Also, it captures all colors at the same resolution. When using Deckard, you are actually getting lossless resolution at this stage.

- Capturing stage in a digital camera: this depends a lot on the file format we are using. If we aren’t using RAW, we will have some resolution loss due to chroma subsampling (the practice of encoding images with less resolution for chroma information than for luma information).

More about that here: https://en.wikipedia.org/wiki/Chroma_subsampling
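Chroma subsampling is easy to quantify. This Python sketch counts samples per plane for the three most common schemes (odd frame sizes and chroma siting are ignored for simplicity). In a 4:2:0 UHD file, each chroma plane is stored at just 1920 × 1080, i.e. color detail is carried at FullHD resolution.

```python
# Horizontal and vertical chroma divisors for common J:a:b schemes.
SUBSAMPLING = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}

def plane_sizes(width, height, scheme):
    """Return (luma_samples, samples_per_chroma_plane) for one frame."""
    h_div, v_div = SUBSAMPLING[scheme]
    return width * height, (width // h_div) * (height // v_div)

if __name__ == "__main__":
    for scheme in SUBSAMPLING:
        luma, chroma = plane_sizes(3840, 2160, scheme)
        print(f"UHD {scheme}: luma {luma:,}  each chroma plane {chroma:,}")
```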

- Capturing stage in Deckard: this depends on format and compression. Capturing here is done either in full floating-point RGB (when using EXR), in PNG (full RGB) or in JPG (which uses variable chroma subsampling).

Delivery of a file is a different beast: you can deliver in MP4, and depending on your quality (bitrate) settings you will get a better or worse image. One trick used by many when delivering to YouTube is to record in FullHD, then simply resample the video to 4K and export it as 4K. This is also used a lot, even in cinema.
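The effect of bitrate can be expressed as the average number of bits each pixel receives. In this Python sketch the bitrates are placeholders, not any platform’s actual settings: at a fixed bitrate, a 4K frame gets a quarter of the bits per pixel of a FullHD frame, so an upscaled 4K export can only look better if the delivery encode gives it proportionally more bitrate.

```python
def bits_per_pixel(bitrate_mbps, width, height, fps):
    """Average compressed bits available per pixel per frame."""
    return bitrate_mbps * 1_000_000 / (width * height * fps)

if __name__ == "__main__":
    # Hypothetical bitrates, chosen only to illustrate the arithmetic.
    for label, mbps, w, h in (("FullHD @ 8 Mbps", 8, 1920, 1080),
                              ("4K @ 8 Mbps", 8, 3840, 2160),
                              ("4K @ 32 Mbps", 32, 3840, 2160)):
        print(f"{label}: {bits_per_pixel(mbps, w, h, 24):.3f} bits/pixel")
```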

Should you care too much about resolution?

It really depends on your content type. For example, scenes that are static and sharp could be rendered at a higher resolution. Those with lots of motion can be rendered at a lower resolution. Images with shallow DOF can also be rendered at lower resolutions and then scaled up for final delivery. One thing to know: an HD image rendered in Deckard Render is more like a 4K image captured with a professional digital camera. So if you have to match content from a real camera, don’t try to match resolutions, as Deckard actually has better optical resolution than a real camera (this is also why I introduced “Use Half Resolution”).

Last but not least: never apply the resolution concept we have in gaming, that “a bigger resolution (and framerate) is better”. Gaming is a different medium from cinema, and its “quality values” are actually inverted. In gaming, you want a bigger resolution because it helps solve aliasing issues. You also want higher framerates because they help solve temporal aliasing issues, all of this to reduce latency and improve real-time fluidity and precision. In cinema, this isn’t wanted or desired. We are still using 24 FPS as a standard (and this will continue to be the standard), and most movies shot on film don’t have a resolution greater than FullHD (actually, many analog movies have a much lower actual resolution).
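The cost side of “bigger is better” is simple arithmetic. This Python sketch counts the pixels that must be produced per second of footage, assuming render time scales roughly with that number (a simplification: effects, DOF and motion blur change the picture). The 60 FPS entry is only an example of a gaming-style target.

```python
def pixels_per_second(width, height, fps):
    """Pixels that must be produced for each second of footage."""
    return width * height * fps

FORMATS = {
    "HD @ 24 fps (cinema)": (1920, 1080, 24),
    "4K @ 24 fps (cinema)": (3840, 2160, 24),
    "4K @ 60 fps (example gaming target)": (3840, 2160, 60),
}

if __name__ == "__main__":
    base = pixels_per_second(*FORMATS["HD @ 24 fps (cinema)"])
    for name, fmt in FORMATS.items():
        rate = pixels_per_second(*fmt)
        print(f"{name}: {rate:,} px/s ({rate / base:.0f}x HD @ 24)")
```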

In this article I didn’t go deep into temporal sampling, but it is also important, because sampling happens in both space and time. Thus, framerate also influences the apparent sharpness of an image: the more frames you render per second, the more sharpness you achieve. But is this what you want?
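Temporal sampling can be quantified too. Assuming the common 180° shutter convention (each frame exposed for half its duration), this Python sketch computes how far a moving object jumps between frames and how long its motion-blur streak is at different framerates; the object speed is a made-up example. Higher framerates shorten the streak, which is where the extra apparent sharpness comes from.

```python
def exposure_time_s(fps, shutter_angle_deg=180.0):
    """Exposure per frame for a given shutter angle (180 deg = half the frame time)."""
    return (shutter_angle_deg / 360.0) / fps

def motion_blur_px(speed_px_per_s, fps, shutter_angle_deg=180.0):
    """Length of the motion-blur streak left by an object moving across the frame."""
    return speed_px_per_s * exposure_time_s(fps, shutter_angle_deg)

if __name__ == "__main__":
    speed = 960  # hypothetical: crosses half of an HD frame per second
    for fps in (24, 48, 120):
        step = speed / fps  # how far the object jumps between frames
        print(f"{fps} fps: jump {step:.0f} px/frame, blur {motion_blur_px(speed, fps):.0f} px")
```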

And this brings us back to the definition from the first part of this article: resolution is a firm decision to do or not to do something.